The Hidden AI Advantage Established Companies Have Over Startups

AI makes it easy to publish. It also makes it easy to publish nothing.

The scary part is not that people use AI. The scary part is how quickly you can become a slop factory without noticing. The output is fluent, tidy, plausible. It sounds like writing. But if you read it back a week later, you cannot remember a single thing it said.

I have been thinking about this a lot lately, because I am standing in both worlds right now: one foot in an established company, one in a brand-new one.

The obvious advantages, plus one I didn't expect

Everyone knows established companies have advantages. Distribution. Trust. Backlinks. Brand recognition. A customer base that already knows who you are.

But here is the advantage I keep seeing in 2026 that nobody talks about:

Established companies can get better output from AI with less effort.

Not because they have more money or better prompts. Because they already have a real archive. Old blog posts. Product decisions that got documented somewhere. Support conversations. Internal docs. Dumb mistakes they learned from. Strong opinions they formed over years of actually doing the work.

When you ask AI for help, you can anchor it with "here is how we talk" and "here is what we believe" and "here is what we have already said." You have receipts.

New companies do not have that. And you feel the gap the moment you sit down to write something new alongside an LLM.

What actually happens

If you do not feed AI "you," it will feed you "everyone."

That's where it goes wrong. Your voice becomes the average of the model's training data plus the top 10 Google results for whatever you are writing about. And you cannot even tell it is happening, because the output reads fine. It is grammatically correct. It has structure. It makes points.

It is also completely forgettable.

This is not a problem you can prompt your way out of. You cannot tell Claude "be more original" and expect it to suddenly develop opinions it does not have access to. The model needs raw material. It needs your perspective, your examples, your way of explaining things. Without that, it does what any good assistant would do: it guesses what a reasonable person might say.

The result is content that could have come from anyone. Which means it might as well have come from no one.

I'm living this right now

I am the co-founder of PreProduct, a company that has been around for over five years. We have shipped things, argued about things, written a lot, changed our minds publicly. There is a trail of evidence behind what we do and why we do it.

I am also building Context Link, which is new. There is no five-year archive. There is no corpus of "this is what we believe" sitting around waiting to be referenced.

The difference is pretty obvious once you're doing both.

At the older company, AI feels like a multiplier. I can ask it to draft something and give it three old blog posts as examples, or use Context Link itself to pull in the snippets from our previous posts and docs that are most relevant to the topic at hand. The output sounds like us, because it has something real to work from.

At the new company, AI feels like a temptation. A temptation to fake certainty too early. To sound confident about things I have not actually figured out yet. To publish something that reads like a company with opinions, when really I am still forming them.

That doesn't mean avoiding AI. It means being pickier about what you let it assert before you've figured out your position.

Why established companies have it easier

They have "free context" sitting around.

The voice is already shaped by years of writing. The opinions are already formed through real tradeoffs. The examples are real, not imagined.

So when you ask AI for a draft, you can anchor it with:

- "Here is how we talk" (old blog posts, docs, emails)

- "Here is what we believe" (positioning docs, internal memos)

- "Here is what we have already said" (past content on this topic)

- "Here are receipts" (customer quotes, support threads, changelog entries)

- "Here is our technical documentation on what is right, wrong, and advisable" (docs portal)

This is not about having more content. It is about having content that is genuinely yours. Content that reflects decisions you actually made and opinions you actually hold.

New companies can have lots of content too. But if that content was generated before the company had real opinions, it is just noise. Worse, it is noise that will shape future AI outputs, creating a feedback loop of generic takes.

The catch

Here is where it gets tricky: models cannot hold your whole company in their head.

Context windows are finite. You cannot just dump "the entire company" into every prompt. Even if you could, the model would likely lose track of what matters.

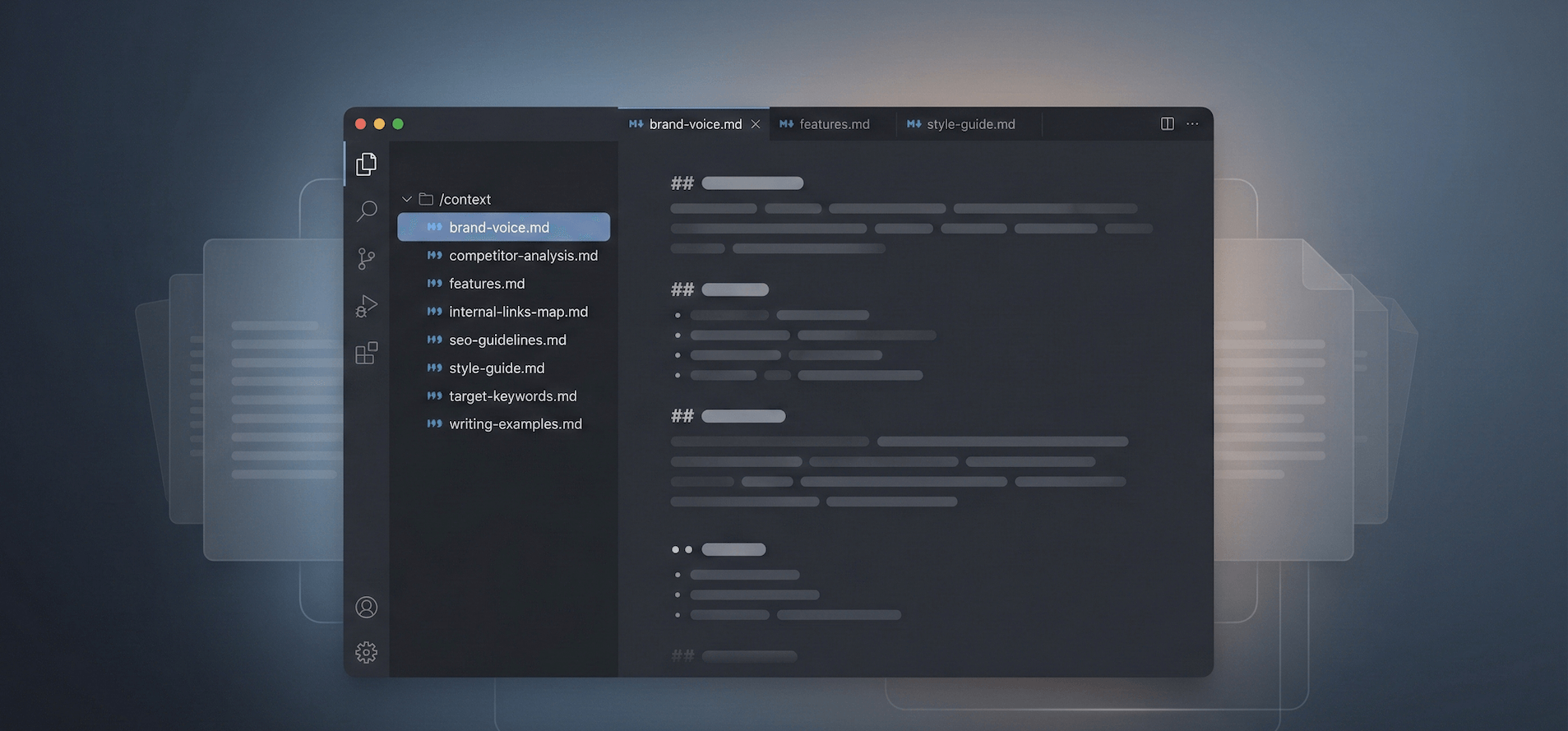

Which means the real skill becomes curation: having the right few docs always ready, plus a way to pull in extra context when needed.

This is where most people go wrong. They try to cram the entire library into the instruction prompt. But instruction docs should be short and boring. They should contain the rules, not the evidence.

A simple split that works:

Layer 1: Always-on instruction prompt (policies, voice, positioning)

These are short and stable. They tell the AI how to behave.

Layer 2: Primary sources you pull in on demand

These are the receipts. Customer quotes. Support threads. Changelog entries. Internal decision notes. Screenshots, numbers, examples. The stuff that makes you sound real.
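Pulling in receipts on demand is, at its simplest, a ranking problem with a budget. The Python below is a minimal sketch of that idea, not how Context Link or any other tool actually does it. It assumes a crude keyword-overlap score and uses word counts as a rough stand-in for tokens; `Snippet`, `curate`, the file labels, and the sample archive are all hypothetical. A real setup would more likely use search or embeddings.

```python
import re
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str  # hypothetical label, e.g. "support/refund-thread.txt"
    text: str


def words(text: str) -> set[str]:
    """Lowercased set of words; crude on purpose (keeps letters, digits, apostrophes)."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def relevance(topic: str, snippet: Snippet) -> int:
    """Assumed scoring: how many topic words also appear in the snippet."""
    return len(words(topic) & words(snippet.text))


def curate(topic: str, snippets: list[Snippet], word_budget: int) -> list[Snippet]:
    """Greedily keep the most relevant snippets that fit within word_budget."""
    ranked = sorted(snippets, key=lambda s: relevance(topic, s), reverse=True)
    chosen: list[Snippet] = []
    used = 0
    for snip in ranked:
        if relevance(topic, snip) == 0:
            break  # ranked descending, so nothing after this is relevant either
        cost = len(snip.text.split())  # word count as a rough proxy for tokens
        if used + cost <= word_budget:
            chosen.append(snip)
            used += cost
    return chosen


# Made-up sample archive, for illustration only.
archive = [
    Snippet("support/refund-thread.txt", "A customer asked why refunds take five days"),
    Snippet("blog/pricing-post.md", "Why we changed pricing and what we learned"),
    Snippet("docs/api.md", "The API returns JSON"),
]
picked = curate("why we changed pricing", archive, word_budget=10)
print([s.source for s in picked])  # ['blog/pricing-post.md']
```

Greedy selection is a deliberate simplification: it keeps the best matches and skips anything that would overflow the budget, which is closer to "the right few docs" than stuffing in everything that loosely matches.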

A handy rule of thumb: keep the rules in the prompt, keep the receipts in the archive.

Or to put it another way: instruction docs should contain policies. Primary sources should contain proof.

A lot of AI writing is bad because it is built on secondary summaries. The moment you ground it in primary sources, it stops sounding like everyone else.
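To make the two-layer split concrete, here is a minimal Python sketch that assembles one request from a short, fixed rules layer and a per-request set of receipts. It assumes a chat-style API that accepts a list of `role`/`content` messages (a common shape, but check your provider's documentation). `build_messages`, the `[source: label]` tags, the sample rules, and the placeholder receipt are illustrative, not taken from any particular tool.

```python
def build_messages(rules: str, receipts: dict[str, str], request: str) -> list[dict[str, str]]:
    """Combine an always-on rules layer with on-demand receipts.

    rules:    Layer 1, short and stable (voice, policies, positioning).
    receipts: Layer 2, primary sources pulled in for this request,
              keyed by a hypothetical source label.
    request:  what you actually want drafted.
    """
    # Tag each receipt with its source so claims in the draft can be traced back.
    evidence = "\n\n".join(
        f"[source: {label}]\n{text.strip()}" for label, text in receipts.items()
    )
    user_content = (
        "Use only the sources below as evidence. "
        "If they don't support a claim, say so instead of guessing.\n\n"
        f"{evidence}\n\nTask: {request}"
    )
    return [
        {"role": "system", "content": rules.strip()},  # the rules, not the evidence
        {"role": "user", "content": user_content},     # the receipts, per request
    ]


# Hypothetical rules layer: kept short and boring on purpose.
RULES = """
Write in first person plural. Short sentences.
Never say "seamless" or "revolutionary".
"""

messages = build_messages(
    RULES,
    {"changelog/example-entry": "(paste the real changelog entry here)"},
    "Draft a paragraph explaining this change.",
)
print(messages[0]["content"].splitlines()[0])
```

Keeping the receipts out of the system message means the rules stay identical across requests, while the evidence changes with each task.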

Context files

Every company has a set of docs they wish everyone read before writing anything. What we do. Who it is for. What we believe and what we do not. How we sound. Examples of great writing we have done before. The "please stop saying this" list.

These docs matter way more now because AI will follow them instantly if they exist.

And it will confidently improvise if they do not.

The difference between a company with good context files and one without is the difference between AI that sounds like you and AI that sounds like a press release written by committee.

This is not complicated to set up. It is just maintenance. The hard part is actually writing down what you believe clearly enough that a model can follow it. Which, it turns out, is also the hard part of building a company.
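The "please stop saying this" list is the easiest context file to put to mechanical use, because you can check a draft against it before anyone reads it. A minimal Python sketch, assuming the list is kept as plain phrases that start and end with a letter or digit; `flag_banned_phrases` and the example entries are hypothetical. It only catches phrases you have already written down, so it supports careful editing rather than replacing it.

```python
import re


def flag_banned_phrases(draft: str, banned: list[str]) -> list[tuple[str, int]]:
    """Return (phrase, line number) for each banned phrase found in the draft.

    Matching is case-insensitive and on whole words, so "leverage" does not
    match inside "leveraged". Assumes each phrase starts and ends with a
    letter or digit, since \\b anchors on word characters. Lines start at 1.
    """
    hits = []
    for line_no, line in enumerate(draft.splitlines(), start=1):
        for phrase in banned:
            pattern = r"\b" + re.escape(phrase) + r"\b"
            if re.search(pattern, line, flags=re.IGNORECASE):
                hits.append((phrase, line_no))
    return hits


# Example stop list; a real one would come from your own context files.
STOP_LIST = ["game-changer", "seamless", "in today's fast-paced world"]

draft = """In today's fast-paced world, pre-orders matter.
Our checkout is seamless.
We learned this the hard way."""
print(flag_banned_phrases(draft, STOP_LIST))
```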

Why new companies need to do the early work

When you are new, you do not just lack content. You lack:

- A consistent voice

- Lived examples

- Battle-tested opinions

If you outsource that too early to AI, you become a company with premature opinions. Or no real opinions at all. Your writing feels like it was written by a committee of ghosts.

I do not think this means new companies cannot use AI. But I think there is a sequence that matters:

- Write the early canon yourself

- Build your pile of receipts

- Then let AI multiply it

Skipping steps one and two is how you end up with a content library that sounds professional but says nothing.

The same thing is happening with code

In software, we are all learning that typing code is not the hard part anymore. The hard part is: what to build, why, and what tradeoffs you accept.

I still struggle with this personally. I like writing code. I like the craft of it and the language I mostly work in (Ruby).

But the leverage moved upstream. The value is in the decisions, not the keystrokes.

I think writing for work is heading the same way. The craft is still there, but the leverage is moving upstream to strategy, context, taste, and editing.

The companies that already have years of decisions documented are starting from a better position. They have the strategy and context already. AI just helps them execute faster.

New companies have to build that foundation first. There is no shortcut.

How you know something's off

One of the most useful tells I have found:

If AI-assisted writing suddenly gets worse, it is usually not "the model got dumb."

It is that your inputs got messy. Stale docs. Contradictions between different context files. Generic positioning that does not actually say anything. Missing examples.

In other words, a drop in the quality of AI-assisted writing is usually a symptom of a problem in your instructions or context layer, not in the model.

When that happens, the fix is not a better prompt. The fix is going back to your context files and cleaning them up. Removing contradictions. Adding fresh examples. Making the positioning specific enough to actually guide behavior.
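Part of that cleanup can be nudged along with a small script. A minimal Python sketch, assuming your context files are Markdown files in a single folder (the `context` folder name and the 90-day threshold are arbitrary placeholders): it lists files that have not been modified recently, so you know which ones to reread first. File age is only a proxy for staleness; it cannot spot contradictions or vague positioning, which still need a human read.

```python
import time
from pathlib import Path
from typing import Optional

SECONDS_PER_DAY = 86_400


def stale_context_files(
    folder: str, max_age_days: float = 90, now: Optional[float] = None
) -> list[tuple[str, int]]:
    """List (filename, age in whole days) for .md files not modified in max_age_days.

    Modification time is only a rough signal: a recently touched file can
    still be out of date, and an old one can still be accurate.
    """
    now = time.time() if now is None else now
    stale = []
    for path in Path(folder).glob("*.md"):
        age_days = (now - path.stat().st_mtime) / SECONDS_PER_DAY
        if age_days > max_age_days:
            stale.append((path.name, int(age_days)))
    # Oldest first, so the most neglected file gets reread first.
    return sorted(stale, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # "context" is a hypothetical folder holding your context files.
    for name, age in stale_context_files("context"):
        print(f"{name}: last modified {age} days ago")
```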

This is maintenance work. It is not glamorous. But it is what separates companies that get value from AI from companies that just generate slop faster.

Proofreading matters more now

Since AI makes producing drafts cheap, proofreading and editing become a real advantage.

This is something I'm quite cognizant of. My brother was diagnosed with dyslexia as an adult, and I suspect I am not far behind him based on my reading speed.

If you cannot or will not read carefully, AI makes it easy to publish errors confidently. The output looks polished. It reads smoothly. But the substance might be wrong, or generic, or contradict something you said last month.

Editing is not just about catching typos. It is about catching the places where AI averaged you out. The places where it said something reasonable instead of something true. The places where it sounds like writing but does not sound like you.

That takes careful reading. Which turns out to be a skill worth developing.

Where this leaves us

This is not "AI is bad."

It is: AI shows you pretty clearly what you have already built.

Established companies get a quiet benefit. They already have the raw material for a voice. The receipts are sitting in old docs and support threads and product decisions. They just need to make that material accessible to AI.

New companies can absolutely win too. But they need to:

- Write the early canon themselves

- Build their pile of receipts

- And only then let AI multiply it

The companies that skip those steps end up with content that sounds like everyone else. Which, in a world where everyone has access to the same models, is the same as having no content at all.

If you are thinking about how to organize context for AI, we built Context Link to handle retrieving that context once you have it. You can also see the GitHub repo we use for our own content workflow (although it's heavily personalized).